Business intelligence has a problem. Despite decades of investment in BI tools, most enterprise data analysis still follows the same basic pattern: someone has a business question, they ask a data analyst, the analyst spends days writing SQL queries and building dashboards, and eventually produces a report. If the initial analysis raises new questions - which it almost always does - the cycle repeats.

Meanwhile, we've seen remarkable progress in AI systems that can conduct autonomous research and analysis. OpenAI's Deep Research agents can investigate complex topics by planning multi-step research strategies, gathering information from diverse sources, and synthesizing comprehensive reports. Google's Gemini and other systems demonstrate similar capabilities for web-based research tasks.

But here's what's missing: none of these advances have meaningfully changed how businesses analyze their internal data - the customer transactions, inventory records, sales metrics, and operational data sitting in their data warehouses. Text-to-SQL systems can generate queries, but they can't conduct the kind of iterative, hypothesis-driven analysis that experienced data analysts do. They can't ask clarifying questions, adapt their approach based on initial findings, or provide the business context that makes analysis actionable.

We built Wobby's Deep Analysis Agent to bridge this gap - bringing the autonomous research capabilities we see in web-based AI systems to enterprise business intelligence.

While the broader AI community refers to these systems as "Deep Research" agents, we use "Deep Analysis" to emphasize our focus on data analysis rather than information gathering.

The Challenges of Applying Gen AI to Real Business Data

Traditional text-to-SQL systems have made impressive strides in academic benchmarks, with some models achieving over 90% execution accuracy on standardized datasets. However, these achievements come with a significant caveat: they operate in simplified environments that bear little resemblance to real-world enterprise scenarios.

Academic benchmarks typically feature small schemas (around 50 columns per database), single SQL dialects (usually SQLite), limited data type support, and simple queries averaging just 30 tokens. In contrast, enterprise data warehouses present challenges that break these assumptions entirely:

- Production databases often contain over 500 columns across hundreds of tables

- Organizations use diverse SQL dialects (BigQuery, Snowflake, Redshift) with platform-specific features

- Understanding the data requires domain knowledge, business definitions, and organizational context that go far beyond table schemas

- Real business questions require multi-step analysis, hypothesis formation, and iterative refinement

The gap between academic success and enterprise deployment has persisted because building effective business intelligence tools requires a complete rethinking of how AI systems approach analytical work, beyond simple SQL generation.

Our Approach

We built what we're calling a Deep Analysis Agent for business intelligence. The core idea is straightforward: instead of just generating SQL queries, we wanted to create an AI system that could handle the full analytical workflow that a human data analyst would follow.

This means the agent needs to do more than query data. It has to create visualizations, manage multiple parallel analysis paths, and synthesize findings into coherent reports. When investigating a business question, experienced analysts often pursue several hypotheses simultaneously - running different queries, creating various charts, and exploring multiple angles before drawing conclusions. We wanted our agent to work the same way, using parallel tool execution to investigate multiple aspects of a problem concurrently rather than following a single linear path.

The system architecture can be represented as a hierarchical multi-agent composition:

Deep Analysis Agent: Ψ = Orchestrator(I, C, P, Composer, R)

Where each component is an independent AI agent with specific responsibilities:

Intent Router: I(q) → {route, confidence} - An agent that classifies incoming queries and routes them to appropriate handlers.

Clarifier Agent: C(q, catalog) → {questions, context} - An autonomous agent that searches the data catalog to understand available data and business definitions, then generates clarification questions only when needed, such as asking about preferred metrics, time periods, or business definitions.

Planning Agent: P(q, clarifications) → objectives = {o₁, o₂, ..., oₙ} - An agent that creates a high-level research strategy where each objective extends beyond the literal question to provide comprehensive business value.

Composer Agent: Composer(objectives, context) → artifacts - The core analytical engine with iterative reasoning and parallel tool execution capabilities.

Report Writer Agent: R(artifacts, context) → comprehensive_report - An independent agent that synthesizes all artifacts produced by the Composer into coherent, business-focused analytical reports.
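To make the composition concrete, here is a minimal Python sketch of Ψ as a sequential pipeline. It is not Wobby's implementation: the `Context` class, the `orchestrate` function, and the route label `"deep_analysis"` are hypothetical, and each agent is reduced to a plain callable where the real system would invoke an LLM-backed agent.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Context:
    """Shared state passed between the sub-agents (hypothetical shape)."""
    question: str
    clarifications: dict[str, str] = field(default_factory=dict)
    objectives: list[str] = field(default_factory=list)
    artifacts: list[dict] = field(default_factory=list)


def orchestrate(
    question: str,
    route: Callable[[str], tuple[str, float]],            # I(q) -> (route, confidence)
    clarify: Callable[[str], dict[str, str]],             # C(q, catalog)
    plan: Callable[[str, dict[str, str]], list[str]],     # P(q, clarifications)
    compose: Callable[[list[str], Context], list[dict]],  # Composer(objectives, context)
    write_report: Callable[[list[dict], Context], str],   # R(artifacts, context)
) -> str:
    """Run the components in sequence; each callable stands in for an LLM-backed agent."""
    label, confidence = route(question)
    if label != "deep_analysis":
        return f"routed to {label} (confidence {confidence:.2f})"
    ctx = Context(question)
    ctx.clarifications = clarify(question)
    ctx.objectives = plan(question, ctx.clarifications)
    ctx.artifacts = compose(ctx.objectives, ctx)
    return write_report(ctx.artifacts, ctx)


# Usage with trivial stand-ins for each agent:
report = orchestrate(
    "How are our stores performing?",
    route=lambda q: ("deep_analysis", 0.92),
    clarify=lambda q: {"metric": "revenue per square foot"},
    plan=lambda q, c: [f"Rank stores by {c['metric']}", "Check for seasonality"],
    compose=lambda objs, ctx: [{"objective": o, "kind": "sql_result"} for o in objs],
    write_report=lambda arts, ctx: f"{len(arts)} artifacts for: {ctx.question}",
)
print(report)  # 2 artifacts for: How are our stores performing?
```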

Each tool interaction updates both the analytical state and the artifact store A, where A = {SQL results, charts, notepads, catalog data, progress tracking}.

The system maintains persistent memory through artifact management, allowing complex analyses to build upon previous work, with automatic persistence of key deliverables for business stakeholders.
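A minimal sketch of the artifact store A, assuming each tool call records its output as a typed artifact and flags key deliverables for persistence. `Artifact`, `ArtifactStore`, and the ID scheme are illustrative names rather than part of the described system, and serializing deliverables to JSON is just one assumed way to persist them.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field

# The artifact kinds listed in A above.
ARTIFACT_KINDS = {"sql_result", "chart", "notepad", "catalog_data", "progress"}


@dataclass
class Artifact:
    id: str
    kind: str
    payload: object
    deliverable: bool = False  # key deliverables are persisted for stakeholders


@dataclass
class ArtifactStore:
    items: dict[str, Artifact] = field(default_factory=dict)

    def add(self, kind: str, payload: object, deliverable: bool = False) -> str:
        """Record an artifact produced by a tool call and return its ID."""
        if kind not in ARTIFACT_KINDS:
            raise ValueError(f"unknown artifact kind: {kind}")
        artifact_id = f"{kind}-{len(self.items) + 1}"
        self.items[artifact_id] = Artifact(artifact_id, kind, payload, deliverable)
        return artifact_id

    def deliverables(self) -> list[Artifact]:
        return [a for a in self.items.values() if a.deliverable]

    def export_deliverables(self) -> str:
        """Serialize key deliverables (payloads must be JSON-serializable)."""
        return json.dumps([asdict(a) for a in self.deliverables()])


store = ArtifactStore()
result_id = store.add("sql_result", [["region_a", 100], ["region_b", 80]], deliverable=True)
store.add("notepad", "Example note: region_a looks seasonal; check Q4")
print(result_id, [a.id for a in store.deliverables()])  # sql_result-1 ['sql_result-1']
```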

Architecture Decisions

We quickly realized that a single large model couldn't handle all the different aspects of the analysis flow effectively. Instead, we built a multi-agent system in which each component has a specific job.

This turned out to be much more effective than trying to cram everything into a single AI agent.

The overall system has two distinct layers:

- Layer 1 - Request Routing: Intent Router → {Quick Analysis, Deep Analysis, Other Handlers}

- Layer 2 - Deep Analysis Agent: Orchestrator(C, P, Composer, R)

Where the Deep Analysis Agent orchestrates multiple specialized sub-agents:

- C (Clarifier): Research question formulation and user guidance

- P (Planner): Analysis plan generation and decomposition

- Composer: Multi-tool analysis execution with parallel orchestration

- R (Report Writer): Business report synthesis and communication

Intent Router: The First Gate

Before diving into the Deep Analysis Agent architecture, it's worth understanding how requests get routed in the first place. Our Intent Router is a separate system that classifies incoming requests into categories like data exploration, specific analysis requests, result explanations, and system inquiries.

The Intent Router's job is simple: determine whether a request needs data analysis or something else entirely. If it's an analysis request, it gets handed off to the appropriate analysis agent (Quick or Deep).
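The routing decision itself can be sketched in a few lines, assuming an upstream LLM classifier has already produced an intent label and a confidence score. The post does not say how Quick versus Deep mode is chosen, so this sketch assumes an explicit `deep_requested` flag (for example, a mode the user selects); the intent labels, handler names, and the 0.7 threshold are all hypothetical.

```python
# Hypothetical labels based on the request categories described above.
ANALYSIS_INTENTS = {"data_exploration", "specific_analysis"}
OTHER_HANDLERS = {"result_explanation": "explain_results", "system_inquiry": "system_help"}


def route(intent: str, confidence: float, deep_requested: bool,
          min_confidence: float = 0.7) -> str:
    """Map a classifier's (intent, confidence) output to a handler name."""
    if confidence < min_confidence:
        return "ask_user_to_rephrase"  # assumption: confirm rather than guess
    if intent in ANALYSIS_INTENTS:
        return "deep_analysis" if deep_requested else "quick_analysis"
    return OTHER_HANDLERS.get(intent, "system_help")


print(route("specific_analysis", 0.91, deep_requested=True))   # deep_analysis
print(route("result_explanation", 0.85, deep_requested=False))  # explain_results
```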

Next: The Actual Deep Analysis Agent

When the Intent Router identifies an analysis request and routes it to Deep Analysis mode, that's when the real orchestration begins. The Deep Analysis Agent is a sophisticated multi-agent system designed to handle complex, multi-faceted business questions that might take 15-20 minutes to fully investigate.

The Clarifier Agent: Intelligent Question Formation

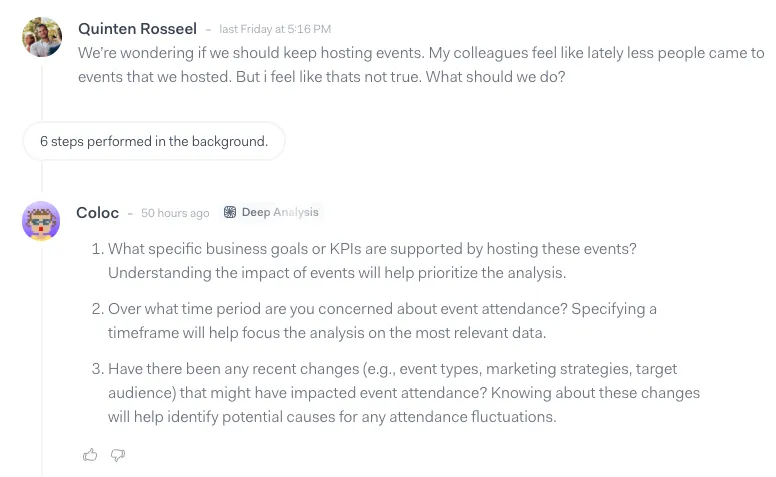

Business users often ask vague questions like "How are our stores performing?" without realizing how many different ways that could be interpreted. The Clarifier Agent solves this by being selective about when to ask for clarification.


The Clarifier Agent first searches through the data catalog to understand available data patterns, business definitions, and existing metrics. Only when it identifies genuine ambiguity or missing business context does it generate clarification questions. For a store performance query, it might ask about specific metrics (revenue per square foot vs inventory turnover), geographic scope, or time periods - but only if the catalog doesn't already contain clear organizational preferences for these choices.

The agent distinguishes between technical questions (which tables contain store data, how to calculate metrics) that it resolves autonomously using catalog search, and business questions (which metrics matter most, what time periods are relevant) that require user input. This prevents overwhelming business stakeholders with technical details while ensuring the analysis captures the right business intent.

Finding the right balance proved challenging. Initially, the agent would ask too many technical questions that non-technical users couldn't answer - things like "Should I use the customer_transactions table or the sales_summary view?" Business users had no way to answer these questions meaningfully. On the other hand, asking too few questions led to analyses that missed crucial business context.

We spent weeks prompt engineering the Clarifier Agent to recognize when catalog information is sufficient versus when human clarification is genuinely needed for business terms, definitions, or analytical preferences.
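The decision rule described above (resolve technical choices autonomously, ask only about business choices the catalog doesn't already settle) can be sketched as follows. In the real agent these judgments come from prompting an LLM with catalog search results; here `Ambiguity`, `triage`, and the `catalog_preferences` dictionary are hypothetical stand-ins, and picking the first option for a technical choice is only a placeholder.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Ambiguity:
    topic: str          # e.g. "metric", "time_period", "source_table"
    kind: str           # "technical" (agent resolves) or "business" (user decides)
    options: list[str]


def triage(ambiguities: list[Ambiguity],
           catalog_preferences: dict[str, str]) -> tuple[list[str], dict[str, str]]:
    """Split open choices into questions for the user and choices resolved without asking."""
    questions: list[str] = []
    resolved: dict[str, str] = {}
    for amb in ambiguities:
        if amb.topic in catalog_preferences:
            resolved[amb.topic] = catalog_preferences[amb.topic]  # catalog already answers it
        elif amb.kind == "technical":
            # Placeholder for the agent's own catalog-driven choice; never shown to the user.
            resolved[amb.topic] = amb.options[0]
        else:
            label = amb.topic.replace("_", " ")
            questions.append(f"Which {label} should I use: {' or '.join(amb.options)}?")
    return questions, resolved


questions, resolved = triage(
    [
        Ambiguity("source_table", "technical", ["customer_transactions", "sales_summary"]),
        Ambiguity("metric", "business", ["revenue per square foot", "inventory turnover"]),
        Ambiguity("time_period", "business", ["last quarter", "last 12 months"]),
    ],
    catalog_preferences={"time_period": "last 12 months"},
)
print(questions)  # ['Which metric should I use: revenue per square foot or inventory turnover?']
```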

Analysis Planning

After we get clarification from the user, we need to turn their business question into an actual analysis plan. This is where we try to be smarter than just taking the question literally.

If someone asks about store performance in the Northeast versus West Coast, we don't just compare those regions directly. The planning agent thinks about what additional context might be useful - seasonal patterns, store types, inventory dynamics - and builds those into the research agenda. The goal is to anticipate what follow-up questions the user would have after seeing the initial results.

We constrain this to 3-5 high-level objectives to keep it focused, but each objective is designed to build toward a comprehensive understanding of the business question.
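A planner's output can be checked against the 3-5 objective budget before execution starts. This is a small illustrative guard, not the described system's code: `enforce_budget` is a hypothetical name, and truncating an over-long plan is an assumption (a real system might ask the planner to consolidate instead).

```python
from __future__ import annotations

MIN_OBJECTIVES, MAX_OBJECTIVES = 3, 5


def enforce_budget(objectives: list[str]) -> list[str]:
    """Drop blanks and duplicates (keeping order), then enforce the 3-5 objective range."""
    unique = list(dict.fromkeys(o.strip() for o in objectives if o.strip()))
    if len(unique) < MIN_OBJECTIVES:
        raise ValueError(f"plan too thin ({len(unique)} objectives); re-plan")
    return unique[:MAX_OBJECTIVES]  # a real system might re-prompt instead of truncating


plan = enforce_budget([
    "Compare Northeast vs West Coast store revenue and margin",
    "Check whether seasonal patterns differ between the regions",
    "Control for store type when comparing the regions",
    "Compare inventory turnover across the regions",
    "Check whether seasonal patterns differ between the regions",  # duplicate, dropped
])
print(len(plan))  # 4
```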

Main Analysis Agent (Composer)

The Composer Agent operates through an adaptive ReAct (reasoning and acting) loop with conditional human interaction.
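The following sketch shows the shape of a ReAct loop with parallel tool execution using Python's `asyncio.gather`: at each step a policy decides which tool calls to make, those calls run concurrently, and their results feed the next decision. The tool names, the toy `decide` policy, and the stub tools are assumptions standing in for LLM reasoning and real warehouse queries; the conditional human-interaction step is omitted.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable

Tool = Callable[[str], Awaitable[str]]


async def react_loop(
    decide: Callable[[list[str]], list[tuple[str, str]]],
    tools: dict[str, Tool],
    max_steps: int = 10,
) -> list[str]:
    """Reason -> act -> observe, running each step's tool calls concurrently."""
    observations: list[str] = []
    for _ in range(max_steps):
        actions = decide(observations)  # reason: choose the next (tool, argument) calls
        if not actions:
            break                       # nothing left to do
        results = await asyncio.gather(*(tools[name](arg) for name, arg in actions))
        observations.extend(results)    # observe: results feed the next decision
    return observations


async def run_sql(query: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for a warehouse round-trip
    return f"rows for {query!r}"


async def make_chart(spec: str) -> str:
    await asyncio.sleep(0.01)
    return f"chart of {spec!r}"


def decide(observations: list[str]) -> list[tuple[str, str]]:
    # Toy policy; in the real system an LLM makes this choice.
    if not observations:  # test two hypotheses in parallel
        return [("sql", "revenue by region"), ("sql", "revenue by store type")]
    if len(observations) == 2:
        return [("chart", "revenue by region")]
    return []


obs = asyncio.run(react_loop(decide, {"sql": run_sql, "chart": make_chart}))
print(obs)
```

Because `asyncio.gather` returns results in the order the calls were submitted, observations stay in a predictable order even though the two queries ran concurrently.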

Improving the Agent's attention span: Notepad

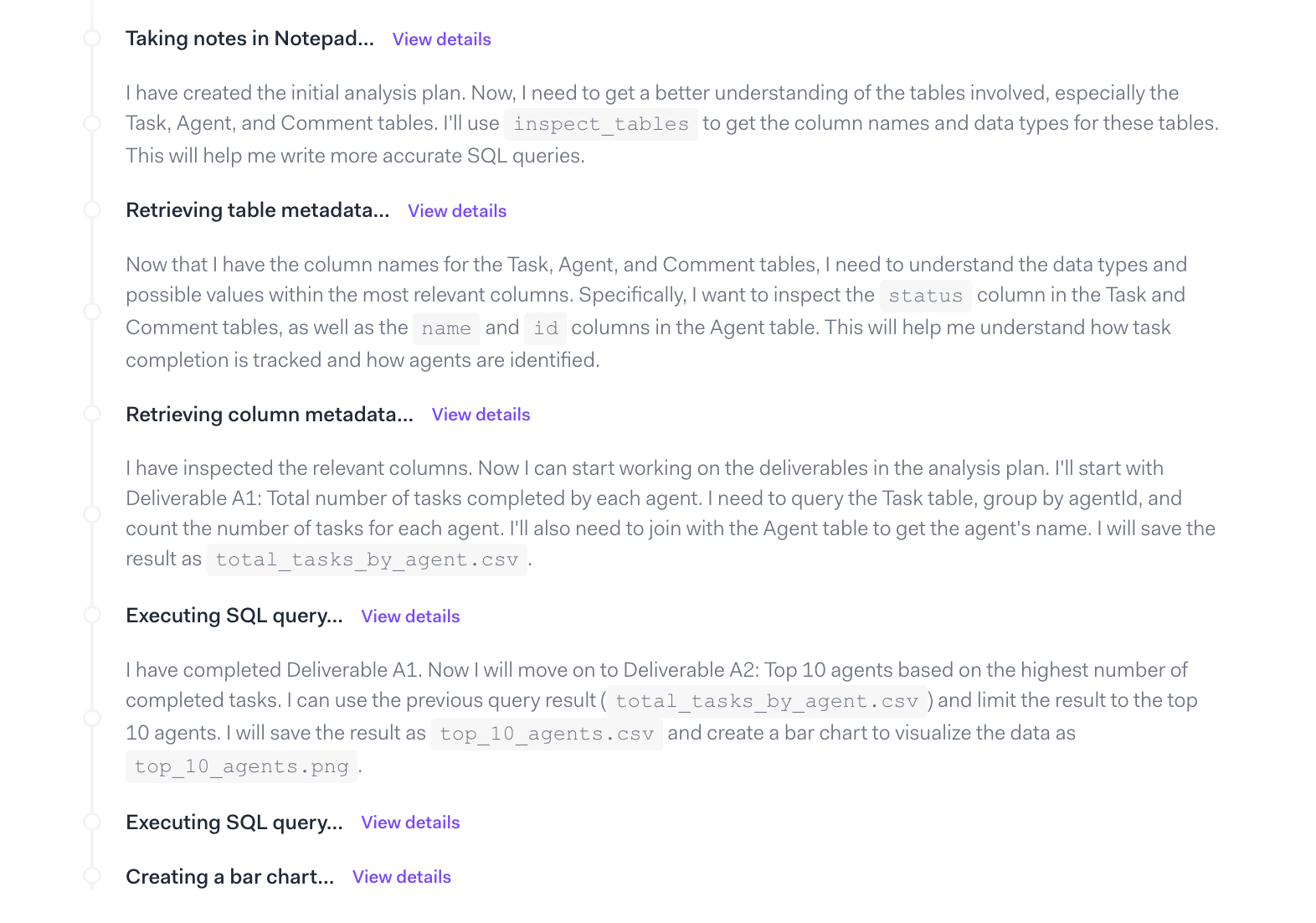

One of our key innovations is the "notepad system" for maintaining analysis context. The agent maintains structured documents, including a master checklist for deliverables and progress tracking, findings organized by research area, and a log of interim discoveries and data quality observations.

Before implementing the notepad system, our agents would frequently "forget" earlier findings and repeat analysis work. Long sessions showed degradation as the agent lost track of what had already been discovered. The structured notepad eliminated this context drift, with agents now maintaining coherent analysis threads even across many iterations.

The most significant improvement came from the behavioral change the notepad induced. When we instructed the agent to explicitly read its notes before making decisions, analysis quality improved. The agent began catching contradictions in its own findings, identifying gaps in its investigation, and making more thoughtful connections between data points.
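A minimal sketch of such a notepad, assuming it is kept as structured data and rendered to text that is placed in the model's context before each decision. The three sections mirror the ones described above; the `Notepad` class, its methods, and the markdown rendering are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Notepad:
    checklist: dict[str, bool] = field(default_factory=dict)  # deliverable -> done?
    findings: dict[str, list[str]] = field(default_factory=lambda: defaultdict(list))
    interim: list[str] = field(default_factory=list)  # discoveries, data-quality notes

    def record(self, area: str, finding: str) -> None:
        self.findings[area].append(finding)

    def complete(self, deliverable: str) -> None:
        self.checklist[deliverable] = True

    def open_items(self) -> list[str]:
        return [d for d, done in self.checklist.items() if not done]

    def render(self) -> str:
        """Text the agent re-reads before each decision so earlier work stays in view."""
        lines = ["## Checklist"]
        lines += [f"- [{'x' if done else ' '}] {d}" for d, done in self.checklist.items()]
        for area, items in self.findings.items():
            lines.append(f"## Findings: {area}")
            lines += [f"- {item}" for item in items]
        if self.interim:
            lines.append("## Interim notes")
            lines += [f"- {note}" for note in self.interim]
        return "\n".join(lines)


pad = Notepad(checklist={"A1": False, "A2": False, "A3": False})
pad.record("Revenue analysis", "Example finding supporting deliverable A1")
pad.complete("A1")
pad.interim.append("Example data-quality note: some stores have no rows after March")
context = pad.render()  # placed in the model's prompt before it picks the next step
print(pad.open_items())  # ['A2', 'A3']
```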

This self-reflection capability naturally leads to the Agent’s ability to adapt the analysis plan dynamically as new insights emerge. While the agent starts with a linear plan (Research Area A → B → C), it can adapt based on discoveries:

Initial Plan:

[A] Revenue analysis (Deliverable A1, A2, A3)

[B] Cost analysis (Deliverable B1, B2)

[C] ROI calculation (Deliverable C1)

After A1 Discovery:

[A] Revenue analysis (✓ A1, A2, A3)

[C] ROI calculation (✓ C1) // Moved up due to A1 dependency

[B] Cost analysis (B1, B2)

This adaptability is crucial for real business analysis, where early findings often change the direction of investigation.
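The reordering in the example can be expressed as a simple rule: when a deliverable finishes, promote any pending research area whose dependencies it has just satisfied. The post does not describe how the agent makes this call (it is presumably an LLM judgment recorded in the notepad), so the explicit `depends_on` map and the `reprioritize` function below are illustrative assumptions.

```python
from __future__ import annotations


def reprioritize(order: list[str], depends_on: dict[str, set[str]],
                 done: set[str], just_finished: str) -> list[str]:
    """Promote pending research areas that `just_finished` has unblocked.

    order: research areas in current plan order, with the active area first.
    depends_on: area -> deliverables it needs before it can start.
    """
    current, rest = order[0], order[1:]
    unblocked = [a for a in rest
                 if just_finished in depends_on.get(a, set()) and depends_on[a] <= done]
    others = [a for a in rest if a not in unblocked]
    return [current, *unblocked, *others]


# Mirrors the example: finishing A1 unblocks the ROI calculation, so C moves ahead of B.
new_order = reprioritize(["A", "B", "C"], depends_on={"C": {"A1"}}, done={"A1"}, just_finished="A1")
print(new_order)  # ['A', 'C', 'B']
```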

The agent systematically inspects table structures and column metadata before executing queries, ensuring analyses are built on solid data understanding. Rather than simply extracting data, it forms hypotheses about patterns and relationships, then designs analyses to test these hypotheses iteratively.
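Schema inspection before querying can be illustrated with SQLite's `PRAGMA table_info`, which reports each column's name and declared type. SQLite is only a stand-in here; warehouses such as BigQuery, Snowflake, and Redshift expose similar metadata, typically through `INFORMATION_SCHEMA` views. The helper names are hypothetical.

```python
from __future__ import annotations

import sqlite3


def table_columns(conn: sqlite3.Connection, table: str) -> dict[str, str]:
    """Return {column name: declared type} for a table, or raise if it doesn't exist."""
    exists = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    if exists is None:
        raise ValueError(f"unknown table: {table}")
    # Identifiers can't be bound as parameters; the name was validated above.
    rows = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    return {row[1]: row[2] for row in rows}  # row = (cid, name, type, notnull, dflt_value, pk)


def missing_columns(conn: sqlite3.Connection, table: str, needed: list[str]) -> list[str]:
    """Columns a planned query references that the table doesn't have."""
    columns = table_columns(conn, table)
    return [c for c in needed if c not in columns]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (store_id INTEGER, region TEXT, revenue REAL, sold_at TEXT)")
print(table_columns(conn, "sales"))
print(missing_columns(conn, "sales", ["region", "revenue", "margin"]))  # ['margin']
```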

Report Writer Agent

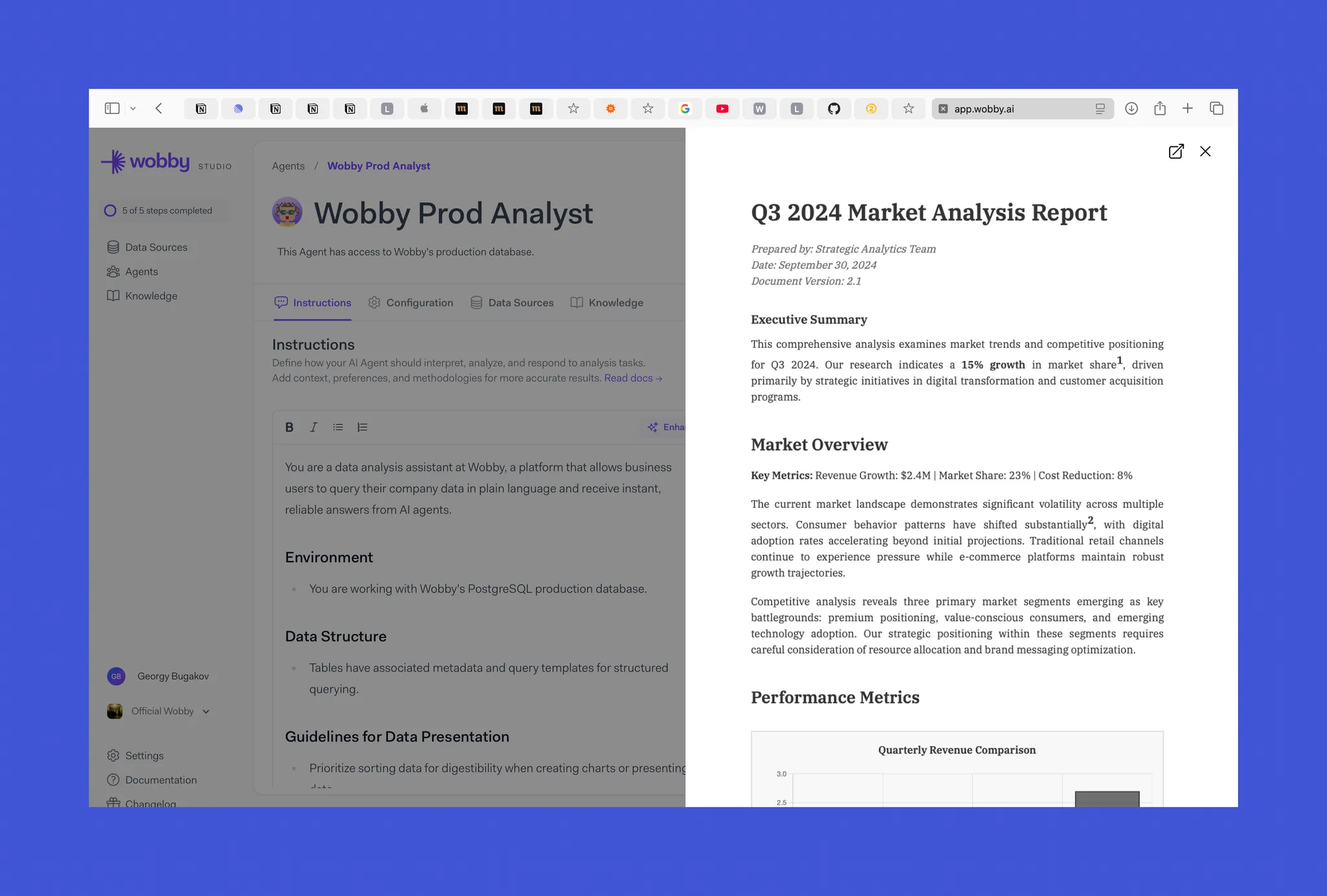

The final stage involves a specialized Report Writer agent that synthesizes all analysis artifacts into a business-ready report. This agent faces a unique challenge: translating technical findings into actionable business insights without hallucinating or over-interpreting the data.

Like the Composer, the Report Writer works iteratively, consolidating all accumulated artifacts into a single cohesive business document for stakeholders.

One innovation we're particularly proud of is our interactive report system, in which the agent can embed artifacts directly. Rather than generating static PDFs or plain text summaries, we created a rich markdown format that combines narrative analysis with interactive elements.

Our reports blend multiple content types:

- Narrative text that explains findings in plain business language

- Interactive charts that users can explore, filter, and drill down into

- Data tables that can be sorted, searched, and exported as CSV files

- Contextual tooltips that provide methodology details without cluttering the main narrative

We developed a custom markdown syntax that makes this possible.
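The post doesn't publish the syntax itself, so the following is a hypothetical illustration of the general idea: the Report Writer emits directives such as `{{chart:ID}}` or `{{table:ID}}`, and a renderer swaps them for the stored artifacts. The directive format, `resolve_embeds`, and the HTML output are all assumptions.

```python
from __future__ import annotations

import re

# Hypothetical directive syntax: {{chart:some_id}} or {{table:some_id}}.
EMBED = re.compile(r"\{\{(chart|table):([A-Za-z0-9_-]+)\}\}")


def resolve_embeds(markdown: str, artifacts: dict[str, str]) -> tuple[str, list[str]]:
    """Replace embed directives with rendered artifacts; collect IDs that don't exist."""
    missing: list[str] = []

    def substitute(m: re.Match) -> str:
        kind, artifact_id = m.group(1), m.group(2)
        if artifact_id not in artifacts:
            missing.append(artifact_id)
            return f"[missing {kind}: {artifact_id}]"
        return f'<div class="{kind}" data-artifact="{artifact_id}">{artifacts[artifact_id]}</div>'

    return EMBED.sub(substitute, markdown), missing


report_md = "Region X leads on revenue.\n\n{{chart:rev_by_region}}\n\n{{table:top_stores}}"
rendered, missing = resolve_embeds(report_md, {"rev_by_region": "<svg></svg>"})
print(missing)  # ['top_stores']
```

Resolving IDs against the artifact store has a useful side effect: a report can only embed charts and tables the Composer actually produced, and a reference to a non-existent artifact is surfaced rather than silently rendered.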

Challenges

Engineering the Report Writer Agent proved more challenging than anticipated. Teaching the agent what constitutes "interesting enough" to report required extensive iteration. The agent initially flagged every small percentage change as 'significant', producing reports cluttered with minor fluctuations rather than focusing on business-relevant insights. LLMs by nature tend to be dramatic, verbose, and flowery in their language, which works poorly for business reporting where stakeholders need clear, concise insights. We spent months prompt engineering the agent to distinguish between statistical noise and meaningful business patterns while maintaining appropriately measured language.
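The post describes solving this through prompt engineering. As a complementary, deterministic check (not the authors' method), a change can be compared against the historical spread of the same metric before it is called significant. This sketch uses a plain z-score; the function name, the threshold of 2, and the example numbers are illustrative, and a metric with trend or seasonality would need a better baseline than a simple mean.

```python
from __future__ import annotations

from statistics import mean, stdev


def is_notable(history: list[float], latest: float, z_threshold: float = 2.0) -> bool:
    """True if `latest` sits far outside the spread of past values (a simple z-score test)."""
    if len(history) < 3:
        return False  # too little history to separate signal from noise
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma >= z_threshold


weekly_sales = [100.0, 102.0, 98.0, 101.0, 99.0]  # illustrative numbers
print(is_notable(weekly_sales, 101.5))  # False: within normal week-to-week variation
print(is_notable(weekly_sales, 110.0))  # True: roughly 6 standard deviations above the mean
```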

Template flexibility presented another complexity. Rather than forcing every analysis into a static report structure, we needed the agent to adapt its reporting format based on the type of analysis and intended audience. A financial trend analysis requires different sections and emphasis than an operational efficiency study or customer segmentation analysis.
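One way to give the agent a starting point without forcing a fixed structure is to suggest an outline by analysis type and audience, which the agent remains free to revise. The templates, section names, and audience rule below are hypothetical.

```python
from __future__ import annotations

# Hypothetical starting outlines per analysis type; the agent may still revise them.
TEMPLATES = {
    "financial_trend": ["Summary", "Trend overview", "Drivers of change", "Outlook and caveats"],
    "operational_efficiency": ["Summary", "Bottlenecks", "Estimated impact", "Recommendations"],
    "customer_segmentation": ["Summary", "Segment profiles", "Segment size and value", "Targeting options"],
}
DEFAULT_OUTLINE = ["Summary", "Findings", "Next steps"]


def report_outline(analysis_type: str, audience: str) -> list[str]:
    """Pick sections by analysis type, then adjust depth for the audience."""
    sections = list(TEMPLATES.get(analysis_type, DEFAULT_OUTLINE))
    if audience == "executive":
        return sections[:2] + ["Recommended actions"]  # short, decision-oriented
    return sections + ["Methodology and data quality"]  # analysts get the details


print(report_outline("operational_efficiency", "executive"))
# ['Summary', 'Bottlenecks', 'Recommended actions']
```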

Additional report-specific tools

We also equipped the Report Writer with additional tools, including a calculator function. LLMs struggle with multi-step mathematical calculations, often producing subtle errors when computing percentages, growth rates, or statistical measures in a single pass. By providing dedicated calculation tools, the agent can verify mathematical accuracy in its reports rather than relying on potentially flawed arithmetic reasoning.
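A calculator tool of this kind might accept an arithmetic expression from the model and return the exact result. This sketch assumes such an expression-string interface (the post does not describe the tool's actual signature) and evaluates expressions by walking Python's `ast` parse tree instead of calling `eval()`, so names, function calls, and attribute access are rejected.

```python
import ast
import operator

# Only these operators are allowed; anything else (names, calls, attributes) is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculate(expression: str) -> float:
    """Evaluate plain arithmetic safely by walking the parsed syntax tree (no eval())."""

    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression element: {type(node).__name__}")

    return ev(ast.parse(expression, mode="eval"))


# Period-over-period growth in percent, and a 3-year compound growth rate.
growth_pct = calculate("(1180000 - 1050000) / 1050000 * 100")
cagr = calculate("(1.5 / 1.0) ** (1 / 3) - 1")
print(round(growth_pct, 2), round(cagr, 4))  # 12.38 0.1447
```

A production version would likely also cap exponent sizes, since an expression like `9 ** 9 ** 9` is valid arithmetic but very expensive to evaluate.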

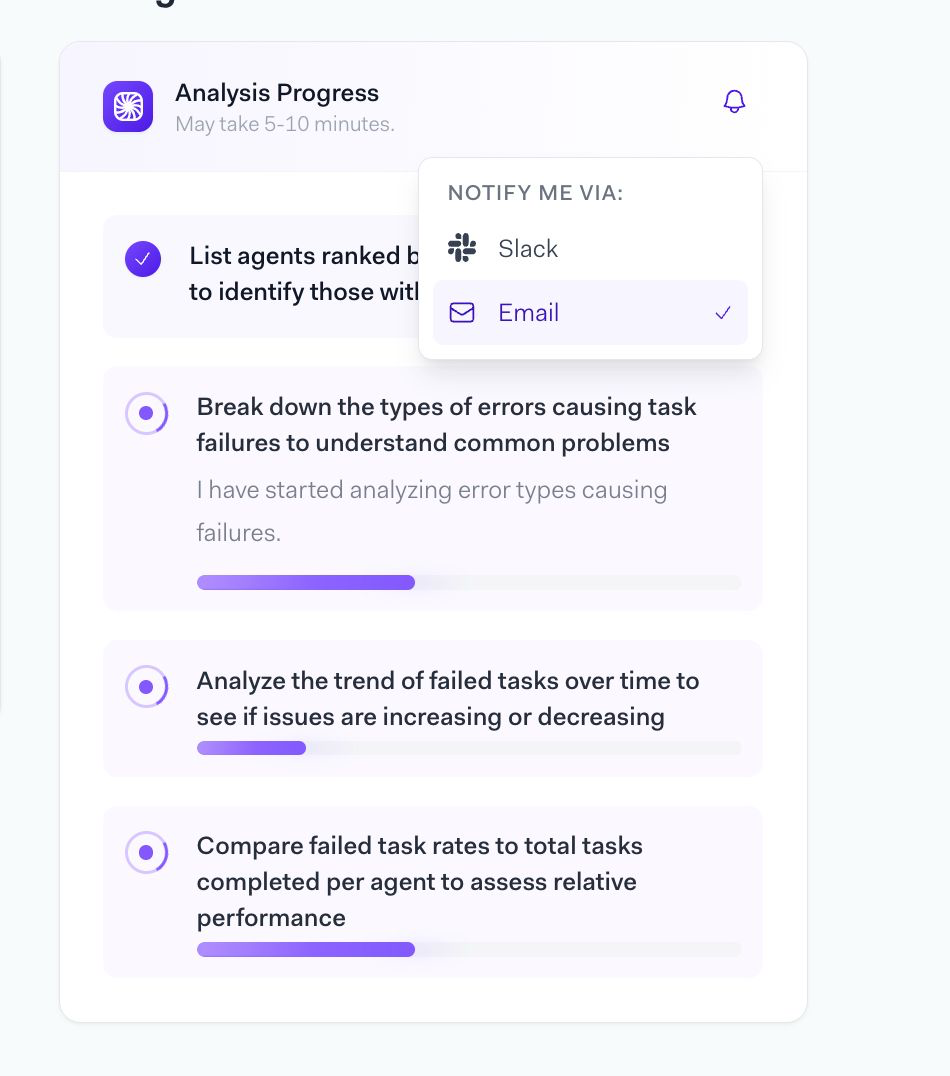

Progress Tracking and Transparency

Users need visibility into long-running analytical processes. Our Progress Tracker provides real-time updates by monitoring agent activity patterns and translating technical actions into business-friendly progress indicators. This addresses a critical gap in existing systems where users have no insight into complex analytical workflows.
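A minimal sketch of the translation step, assuming the tracker sees the name of the agent's most recent tool call and the notepad checklist's completion count. The tool names and phrasing are hypothetical, as is the choice to report progress as completed deliverables.

```python
from __future__ import annotations

# Hypothetical tool names mapped to business-friendly phrasing.
FRIENDLY = {
    "search_catalog": "Reviewing available data and business definitions",
    "describe_table": "Checking how the data is structured",
    "run_sql": "Querying your data",
    "make_chart": "Building a chart",
    "write_notepad": "Recording findings",
    "write_report": "Writing the report",
}


def progress_update(recent_tools: list[str], done: int, total: int) -> str:
    """Turn the agent's latest tool call and checklist status into one status line."""
    latest = recent_tools[-1] if recent_tools else ""
    activity = FRIENDLY.get(latest, "Working on the analysis")
    pct = round(100 * done / total) if total else 0
    return f"{activity} ({done} of {total} deliverables, {pct}%)"


print(progress_update(["search_catalog", "run_sql", "run_sql"], done=2, total=6))
# Querying your data (2 of 6 deliverables, 33%)
```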

Beta Testing Results

Our beta testing with customers has provided valuable insights into both the potential and current limitations of our product.

One retail customer used our Deep Analysis Agent to investigate inventory optimization across their store network. While Wobby's existing quick analysis mode could identify basic stock levels and turnover rates, the deep analysis provided additional context. The agent noted different seasonal patterns between tourist areas and suburban locations, though validation showed some patterns needed business context to interpret correctly. The analysis also flagged potential connections between promotional activity in different product categories, which required further investigation to confirm their business relevance. The system identified statistical indicators for overstocking and understocking situations, though implementation required human judgment about business thresholds.

This analysis, which would typically have required 2-3 days of analyst time, was completed in approximately 10 minutes. However, we've learned that human expertise remains critical for domain validation, strategic context, and implementation planning. Business analysts still need to validate whether discovered patterns make business sense. The agent can identify correlations, but human judgment is essential for determining causation and business implications. While the agent can suggest what to analyze, translating insights into actionable business processes requires human expertise.

In another case, a colocation housing platform CEO had a specific concern: "I have an assumption that potential new roomies are rejected based on their age. For example, if a room participant is 45 years old, and a household has average age of 25 years old, then I see that there is some discrimination happening where the participant is being rejected by voting. So I want you to do an analysis on how much this happens, where new participants are rejected by votings based on age."

This type of analysis would typically take their team days to complete—if it was feasible at all given competing priorities. The Deep Analysis Agent was able to examine voting patterns, control for factors like location and income, and identify potential age-based discrimination patterns. However, the agent also appropriately flagged when it needed human input about business rules, voting thresholds, and how to interpret edge cases in the data.

Current Challenges and Looking Forward

While Deep Analysis agents for business intelligence show promise, several challenges remain.

The agent's effectiveness is directly tied to data catalog completeness and accuracy. Incomplete or outdated catalog information can lead to suboptimal analysis directions. Some domain-specific business rules and exceptions are difficult for the agent to infer automatically, requiring ongoing human guidance and validation. While the agent excels at identifying patterns, distinguishing correlation from causation or understanding the business context behind statistical relationships often requires human expertise. Complex enterprise scenarios with unusual data patterns or business requirements sometimes require manual intervention and refinement.

The future of business intelligence involves partnership between AI capabilities and human insight. AI agents can handle the computational complexity and scale of modern enterprise data environments, while human analysts provide domain expertise, strategic context, and business judgment that remain essential for actionable insights.

For data teams considering AI-augmented analytics, the combination of deep research capabilities with catalog-driven context offers a practical path forward—one that enhances human analytical capabilities while respecting the complexities and nuances of real enterprise decision-making.